Working with the gmprocess ASDF file¶

For browsing information in the HDF5/ASDF files output by gmprocess, the overview of the organizational structure in the Workspace section of the manual should be a useful reference.

Note that the StreamWorkspace object is essentially a gmprocess wrapper around the ASDF structure, and that ASDF is a specific HDF5 format developed for seismological data.

As such, it is possible to work with the ASDF file using the StreamWorkspace functions, the pyasdf library, or the h5py library.

Note

We recommend that users who are new to python and/or gmprocess should only use the StreamWorkspace interface, which handles a lot of the more confusing bookkeeping for accessing and arranging data from the various sections of the ASDF file (e.g., Waveforms, AuxiliaryData, and Provenance).

Only more advanced users should attempt to use the pyasdf and h5py interfaces.

The StreamWorkspace interface¶

First, some imports

from gmprocess.io.asdf.stream_workspace import StreamWorkspace

from gmprocess.utils.constants import DATA_DIR

And now we open an example ASDF file

# Path to example data

data_path = DATA_DIR / 'demo' / 'nc72282711' / 'workspace.h5'

workspace = StreamWorkspace.open(data_path)

Now, we will check to see what labels are present. There is typically the “unprocessed” label and one for the processed waveforms. The processed waveform label is “default” unless the user has set the label for the processed waveforms.

labels = workspace.get_labels()

print(labels)

['default', 'unprocessed']

It is generally possible to have multiple events in an ASDF file, but gmprocess follows a convention of having one event per ASDF file.

eventids = workspace.get_event_ids()

print(eventids)

['nc72282711']

If you want a StreamCollection object (see here for more info), the get_streams function constructs it from the workspace file

sc = workspace.get_streams('nc72282711', stations=['CE.68150'], labels=['default'])

sc.describe()

1 StationStreams(s) in StreamCollection:

3 StationTrace(s) in StationStream (passed):

CE.68150..HNE | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples (passed)

CE.68150..HNN | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples (passed)

CE.68150..HNZ | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples (passed)

The describe function prints a summary of the StreamCollection, which groups the StationTraces within StationStreams, gives each StationTrace’s identification information, and indicates if the StationStream/StationTrace is labeled as “passed” or “failed.

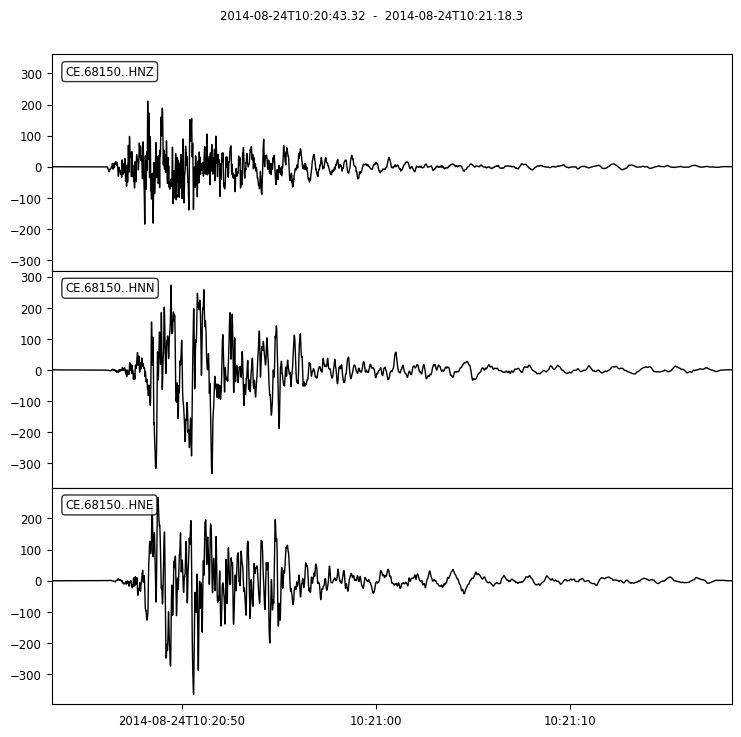

And the waveforms can be plotted by selecting the first (and only) StationStream with

One convenient aspect of a StreamCollection is that it includes StationStream objects, and it is easy to access metadata, including the provenance of the data. We can print it’s provenance information with the folling simple lines of code

print(sta_st[0].provenance.get_prov_dataframe())

Index Process Step Process Attribute Process Value

0 0 Detrend detrending_method linear

1 1 Detrend detrending_method demean

2 2 Remove Response input_units counts

3 2 Remove Response method remove_response

4 2 Remove Response output_units cm/s^2

5 2 Remove Response pre_filt_freqs (0.001, 0.005, 90.0, 100.0)

6 2 Remove Response water_level 60

7 3 Detrend detrending_method linear

8 4 Detrend detrending_method demean

9 5 Cut new_end_time 2014-08-24T10:21:18+00:00

10 5 Cut new_start_time 2014-08-24T10:20:43+00:00

11 6 Taper side both

12 6 Taper taper_width 0.05

13 6 Taper window_type Hann

14 7 Highpass Filter corner_frequency 0.041109969167523124

15 7 Highpass Filter filter_order 5

16 7 Highpass Filter filter_type Butterworth gmprocess

17 7 Highpass Filter number_of_passes 1

18 8 Lowpass Filter corner_frequency 40.0

19 8 Lowpass Filter filter_order 5

20 8 Lowpass Filter filter_type Butterworth gmprocess

21 8 Lowpass Filter number_of_passes 1

22 9 Detrend detrending_method pre

23 10 Detrend detrending_method baseline_sixth_order

You can also get the entire provenance document for all stations with

prov = workspace.get_provenance('nc72282711', labels=['default'])

print(prov)

Record Processing Step Step Attribute \

0 CE.68150..HNE_nc72282711_default Detrend detrending_method

1 CE.68150..HNE_nc72282711_default Detrend detrending_method

2 CE.68150..HNE_nc72282711_default Remove Response input_units

3 CE.68150..HNE_nc72282711_default Remove Response method

4 CE.68150..HNE_nc72282711_default Remove Response output_units

.. ... ... ...

67 CE.68150..HNZ_nc72282711_default Lowpass Filter filter_order

68 CE.68150..HNZ_nc72282711_default Lowpass Filter filter_type

69 CE.68150..HNZ_nc72282711_default Lowpass Filter number_of_passes

70 CE.68150..HNZ_nc72282711_default Detrend detrending_method

71 CE.68150..HNZ_nc72282711_default Detrend detrending_method

Attribute Value

0 linear

1 demean

2 counts

3 remove_response

4 cm/s^2

.. ...

67 5

68 Butterworth gmprocess

69 1

70 pre

71 baseline_sixth_order

[72 rows x 4 columns]

Also, the printing dictionaries in python are not very readable in some cases. So we can define a convenience function for this tutorial to make them easier to view

import json

import numpy as np

class NumpyEncoder(json.JSONEncoder):

def default(self, obj):

if isinstance(obj, np.ndarray):

return obj.tolist()

return json.JSONEncoder.default(self, obj)

def print_dict(jdict):

lines = json.dumps(jdict, indent=2, cls=NumpyEncoder).split("\n")

for line in lines:

print(line)

Note that the NumpyEncoder class is a solution from from stackverflow by user karlB.

If you want to inspect the rupture object for the event, it can be accessed with

rupt = workspace.get_rupture('nc72282711', label='default')

print_dict(rupt)

{

"cells": [

[

0,

1,

2,

3

]

],

"vertices": [

[

-122.31300000000005,

38.2200000000003,

1.9999999999739229

],

[

-122.33300000000003,

38.31000000000029,

1.9999999999748543

],

[

-122.33299988608721,

38.31000001574452,

10.99999999917671

],

[

-122.31300000000005,

38.22000000000898,

10.999999999215827

]

],

"description": "Source: Jack Boatwright's interpolation of Doug Dreger's finite fault",

"reference": "Source: Jack Boatwright's interpolation of Doug Dreger's finite fault"

}

We can see that the rupture is represented as a dictionary with keys of “cells”, “description”, “reference”, and “vertices”. In this case, we can see that this rupture extent was created by Jack Boatwright based on a slip inversion from Doug Dreger. These are the verticies that are used for computing finite source distances.

The config file that was used for this event is a dictionary stored as a

ruamel object

that can be access as the config attribute to the workspace file.

print(f"{type(workspace.config)=}")

conf_dict = dict(workspace.config)

print(f"{conf_dict.keys()=}")

type(workspace.config)=<class 'ruamel.yaml.comments.CommentedMap'>

conf_dict.keys()=dict_keys(['fetchers', 'read', 'windows', 'check_stream', 'processing', 'colocated', 'duplicate', 'build_report', 'metrics', 'gmm_selection', 'integration', 'differentiation', 'pickers', 'error_notification', 'strec', 'version', 'user'])

Here, we only print the “metrics” section to keep things simple.

print_dict(workspace.config["metrics"])

{

"components_and_types": {

"channels": [

"pga",

"pgv",

"sa",

"cav",

"duration"

],

"rotd": [

"pga"

],

"geometric_mean": [],

"quadratic_mean": []

},

"component_parameters": {

"rotd": {

"percentiles": [

50.0

]

}

},

"type_parameters": {

"sa": {

"damping": [

0.05

],

"periods": [

0.3,

1.0,

3.0

]

},

"fas": {

"smoothing_method": "konno_ohmachi",

"smoothing_parameter": 20.0,

"allow_nans": true,

"frequencies": {

"start": 0.001,

"stop": 100.0,

"num": 401

}

},

"duration": {

"intervals": [

"5-75",

"5-95"

]

}

}

}

There are multiple ways to access the metrics from the StreamWorkspace. For this tutorial, we will use the “WaveformMetricCollection” class:

from gmprocess.metrics.waveform_metric_collection import WaveformMetricCollection

wmc = WaveformMetricCollection.from_workspace(workspace, label='default')

print(wmc)

WaveformMetricCollection: 1 stations

Printing the WaveformMetricCollection object simply indicates that there is one station available in the small dataset for this tutorial. We can see the attributes of the object that may be useful with

wmc.__dict__.keys()

dict_keys(['waveform_metrics', 'stream_metadata', 'stream_paths'])

Let’s inspect the waveform_metrics attribute further.

First, we can see that it is a list with length one

print(f"{type(wmc.waveform_metrics)=}")

print(f"{len(wmc.waveform_metrics)=}")

type(wmc.waveform_metrics)=<class 'list'>

len(wmc.waveform_metrics)=1

The length is one because we only have one station in this dataset, and the elements of waveform_metrics list have a one-to-one correspondance to the stations.

And each element of the waveform_metrics list is a WaveformMetricList object:

print(f"{type(wmc.waveform_metrics[0])=}")

type(wmc.waveform_metrics[0])=<class 'gmprocess.metrics.waveform_metric_list.WaveformMetricList'>

So let’s select the first WaveformMetricList object and look at it

wml = wmc.waveform_metrics[0]

print(wml)

8 metric(s) in list:

PGA: Channels(component=z)=21.517, Channels(component=h1)=37.279, Channels(component=h2)=33.892, RotD(percentile=50.0)=34.268

PGV: Channels(component=z)=18.916, Channels(component=h2)=55.183, Channels(component=h1)=56.846

...

Duration(5-95): Channels(component=h1)=7.265, Channels(component=h2)=7.235, Channels(component=z)=8.965

This summary indicates that there are 8 metrics in the WaveformMetricList for this station. It also gives a quick summary of what each metric is, such as PGA. For each metric, there are different values for each component (e.g., the as-recorded channels vs RotD50).

The constituent data within the WaveformMetricList is in the metric_list attribute

print(f"{type(wml.metric_list)=}")

print(wml.metric_list)

type(wml.metric_list)=<class 'list'>

[PGA: Channels(component=z)=21.517, Channels(component=h1)=37.279, Channels(component=h2)=33.892, RotD(percentile=50.0)=34.268, PGV: Channels(component=z)=18.916, Channels(component=h2)=55.183, Channels(component=h1)=56.846, SA(T=0.3000, D=0.050): Channels(component=h1)=77.004, Channels(component=z)=39.549, Channels(component=h2)=71.425, SA(T=1.0000, D=0.050): Channels(component=z)=22.032, Channels(component=h1)=46.442, Channels(component=h2)=55.060, SA(T=3.0000, D=0.050): Channels(component=h1)=13.164, Channels(component=h2)=12.684, Channels(component=z)=6.277, CAV: Channels(component=h1)=0.869, Channels(component=h2)=0.900, Channels(component=z)=0.475, Duration(5-75): Channels(component=h1)=4.420, Channels(component=h2)=2.990, Channels(component=z)=4.555, Duration(5-95): Channels(component=h1)=7.265, Channels(component=h2)=7.235, Channels(component=z)=8.965]

Each element in this list is a WaveformMetricType subclass

print(f"{type(wml.metric_list[0])=}")

type(wml.metric_list[0])=<class 'gmprocess.metrics.waveform_metric_type.PGA'>

You can learn more about these classes and their attributes by browsing the source code here. But for this tutorial, we will just convert them to a dictionary

pga_dict = wml.metric_list[0].to_dict()

print(pga_dict)

{'values': [37.278592, 33.891816, 21.516983, 34.267543], 'components': [Channels(component=h1), Channels(component=h2), Channels(component=z), RotD(percentile=50.0)], 'type': 'PGA', 'format_type': 'pgm', 'units': '%g', 'metric_attributes': {}, 'component_to_channel': {'H1': 'H1', 'H2': 'H2', 'Z': 'Z'}}

So, if we want to select the SA for the “h1” component for all of the available periods, we have to write a loop to collect the values

sa = []

period = []

for metric in wml.metric_list:

if metric.type != "SA":

# We only want SA

continue

mdict = metric.to_dict()

for comp, val in zip(mdict["components"], mdict["values"]):

if comp.component_attributes["component_string"] == "h1":

sa.append(val)

period.append(mdict["metric_attributes"]["period"])

print(f"{period=}")

print(f"{sa=}")

period=[0.3, 1.0, 3.0]

sa=[77.00425, 46.442114, 13.164146]

And here you can see that there are only three periods conigured for the spectral acceleration calculation. If a larger number of periods were specified in the config file, it would likely be useful to plot the SA as a fuction of period to see the response spectrum.

The pyasdf interface¶

The ASDF data structure can be accessed directly from the StreamWorkspace object via the dataset attribute.

Here, we print a summary of the ASDF object

ds = workspace.dataset

print(ds)

ASDF file [format version: 1.0.3]: '../../../venv/lib/python3.12/site-packages/gmprocess/data/demo/nc72282711/workspace.h5' (2.0 MB)

Contains 1 event(s)

Contains waveform data from 1 station(s).

Contains 10 type(s) of auxiliary data: Cache, RuptureModels, StationMetrics, StreamProcessingParameters, StreamSupplementalStats, StrecParameters, TraceProcessingParameters, WaveFormMetrics, config, gmprocess_version

Alternatively, the ASDF object can be created directly with the pyasdf library

import pyasdf

ds = pyasdf.ASDFDataSet(data_path)

print(ds)

ASDF file [format version: 1.0.3]: '../../../venv/lib/python3.12/site-packages/gmprocess/data/demo/nc72282711/workspace.h5' (2.0 MB)

Contains 1 event(s)

Contains waveform data from 1 station(s).

Contains 10 type(s) of auxiliary data: Cache, RuptureModels, StationMetrics, StreamProcessingParameters, StreamSupplementalStats, StrecParameters, TraceProcessingParameters, WaveFormMetrics, config, gmprocess_version

It is easy to get a list of the stations in the dataset

station_list = ds.waveforms.list()

print(f"{station_list=}")

station_list=['CE.68150']

You can retrieve an obspy stream from the ASDF file by browsing the waveforms with knowledge of the stations, event ID, and labels. Note that ASDF uses a “tag” that combines the event ID and label.

station = station_list[0]

event_id = "nc72282711"

label = "default"

tag = f"{event_id}_{label}"

st = ds.waveforms[station][tag]

print(st)

st.plot();

3 Trace(s) in Stream:

CE.68150..HNE | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples

CE.68150..HNN | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples

CE.68150..HNZ | 2014-08-24T10:20:43.320000Z - 2014-08-24T10:21:18.300000Z | 200.0 Hz, 6997 samples

The pyasdf library provides a lot more functionality than this, and we refer users to their documentation for additional details and examples.

The h5py interface¶

Of the three options that we discuss in this tutorial, the “lowest level” interface to the ASDF file is to read it in as an HDF5 object:

import h5py

h5 = h5py.File(data_path, "r+")

print(h5)

<HDF5 file "workspace.h5" (mode r+)>

The HDF5 standard is extremely flexible and is composed of three essential ingredients: “datasets”, “groups”, and “attributes”. Datasets are multidimensional arrays, groups are conceptually similar to directories on a file system or dictionary keys, and attributes are metadata associated with datasets or groups. The format is hierarchical because groups can contain other groups and/or datasets.

You can use the keys method to see group keys:

h5.keys()

<KeysViewHDF5 ['AuxiliaryData', 'Provenance', 'QuakeML', 'Waveforms']>

To demonstrate how to access the value of a group key, we will use the QuakeML entry:

print(h5["QuakeML"])

<HDF5 dataset "QuakeML": shape (827,), type "|i1">

And we can see that this is a dataset.

To see the data in the dataset we can use the [:] operator:

print(h5["QuakeML"][:])

[ 60 63 120 109 108 32 118 101 114 115 105 111 110 61 39 49 46 48

39 32 101 110 99 111 100 105 110 103 61 39 117 116 102 45 56 39

63 62 10 60 113 58 113 117 97 107 101 109 108 32 120 109 108 110

115 61 34 104 116 116 112 58 47 47 113 117 97 107 101 109 108 46

111 114 103 47 120 109 108 110 115 47 98 101 100 47 49 46 50 34

32 120 109 108 110 115 58 113 61 34 104 116 116 112 58 47 47 113

117 97 107 101 109 108 46 111 114 103 47 120 109 108 110 115 47 113

117 97 107 101 109 108 47 49 46 50 34 62 10 32 32 60 101 118

101 110 116 80 97 114 97 109 101 116 101 114 115 32 112 117 98 108

105 99 73 68 61 34 115 109 105 58 108 111 99 97 108 47 97 54

98 97 101 57 52 97 45 53 52 51 102 45 52 54 54 57 45 56

100 53 100 45 57 54 52 100 50 48 48 98 102 52 49 99 34 62

10 32 32 32 32 60 101 118 101 110 116 32 112 117 98 108 105 99

73 68 61 34 115 109 105 58 108 111 99 97 108 47 110 99 55 50

50 56 50 55 49 49 34 62 10 32 32 32 32 32 32 60 111 114

105 103 105 110 32 112 117 98 108 105 99 73 68 61 34 115 109 105

58 108 111 99 97 108 47 110 99 55 50 50 56 50 55 49 49 34

62 10 32 32 32 32 32 32 32 32 60 116 105 109 101 62 10 32

32 32 32 32 32 32 32 32 32 60 118 97 108 117 101 62 50 48

49 52 45 48 56 45 50 52 84 49 48 58 50 48 58 52 52 46

48 55 48 48 48 48 90 60 47 118 97 108 117 101 62 10 32 32

32 32 32 32 32 32 60 47 116 105 109 101 62 10 32 32 32 32

32 32 32 32 60 108 97 116 105 116 117 100 101 62 10 32 32 32

32 32 32 32 32 32 32 60 118 97 108 117 101 62 51 56 46 50

49 53 49 54 54 55 60 47 118 97 108 117 101 62 10 32 32 32

32 32 32 32 32 60 47 108 97 116 105 116 117 100 101 62 10 32

32 32 32 32 32 32 32 60 108 111 110 103 105 116 117 100 101 62

10 32 32 32 32 32 32 32 32 32 32 60 118 97 108 117 101 62

45 49 50 50 46 51 49 50 51 51 51 51 60 47 118 97 108 117

101 62 10 32 32 32 32 32 32 32 32 60 47 108 111 110 103 105

116 117 100 101 62 10 32 32 32 32 32 32 32 32 60 100 101 112

116 104 62 10 32 32 32 32 32 32 32 32 32 32 60 118 97 108

117 101 62 49 49 49 50 48 46 48 60 47 118 97 108 117 101 62

10 32 32 32 32 32 32 32 32 60 47 100 101 112 116 104 62 10

32 32 32 32 32 32 60 47 111 114 105 103 105 110 62 10 32 32

32 32 32 32 60 109 97 103 110 105 116 117 100 101 32 112 117 98

108 105 99 73 68 61 34 115 109 105 58 108 111 99 97 108 47 110

99 55 50 50 56 50 55 49 49 34 62 10 32 32 32 32 32 32

32 32 60 109 97 103 62 10 32 32 32 32 32 32 32 32 32 32

60 118 97 108 117 101 62 54 46 48 50 60 47 118 97 108 117 101

62 10 32 32 32 32 32 32 32 32 60 47 109 97 103 62 10 32

32 32 32 32 32 32 32 60 116 121 112 101 62 109 119 60 47 116

121 112 101 62 10 32 32 32 32 32 32 60 47 109 97 103 110 105

116 117 100 101 62 10 32 32 32 32 60 47 101 118 101 110 116 62

10 32 32 60 47 101 118 101 110 116 80 97 114 97 109 101 116 101

114 115 62 10 60 47 113 58 113 117 97 107 101 109 108 62 10]

And it is not immediately clear how to interpret these numbers. While pyasdf has functions for more easily parsing the data, if we are using the h5py objects it is useful to use the ASDF definition to help us understand how to parse the data. From the ASDF definition, we see that the QuakeML dataset is a binary dump of a QuakeML file.

So we can create an in-memory binary stream of the data with the io library

import io

bytes = io.BytesIO(h5["QuakeML"][:])

And then we can hand this off to obspy’s read_events method

import obspy

catalog = obspy.read_events(bytes)

print(catalog)

1 Event(s) in Catalog:

2014-08-24T10:20:44.070000Z | +38.215, -122.312 | 6.02 mw

The list of events is given as an attribute to the catalog

print(catalog.events)

[Event(resource_id=ResourceIdentifier(id="smi:local/nc72282711"))]

We can then select the only event in the list

event = catalog.events[0]

print(f"{type(event)=}")

print(event)

type(event)=<class 'obspy.core.event.event.Event'>

Event: 2014-08-24T10:20:44.070000Z | +38.215, -122.312 | 6.02 mw

resource_id: ResourceIdentifier(id="smi:local/nc72282711")

---------

origins: 1 Elements

magnitudes: 1 Elements

And Event object many attributes and we may be primarily interested in the magnitudes and origins:

event = catalog.events[0]

print(event.magnitudes)

print(event.origins)

[Magnitude(resource_id=ResourceIdentifier(id="smi:local/nc72282711"), mag=6.02, magnitude_type='mw')]

[Origin(resource_id=ResourceIdentifier(id="smi:local/nc72282711"), time=UTCDateTime(2014, 8, 24, 10, 20, 44, 70000), longitude=-122.3123333, latitude=38.2151667, depth=11120.0)]

And finally, we may want to access the latitutde, longitude, depth, and magnitude of the earthquake:

magnitude = event.magnitudes[0]

origin = event.origins[0]

print(f"{magnitude.mag=}")

print(f"{origin.longitude=}")

print(f"{origin.latitude=}")

print(f"{origin.depth=}")

magnitude.mag=6.02

origin.longitude=-122.3123333

origin.latitude=38.2151667

origin.depth=11120.0